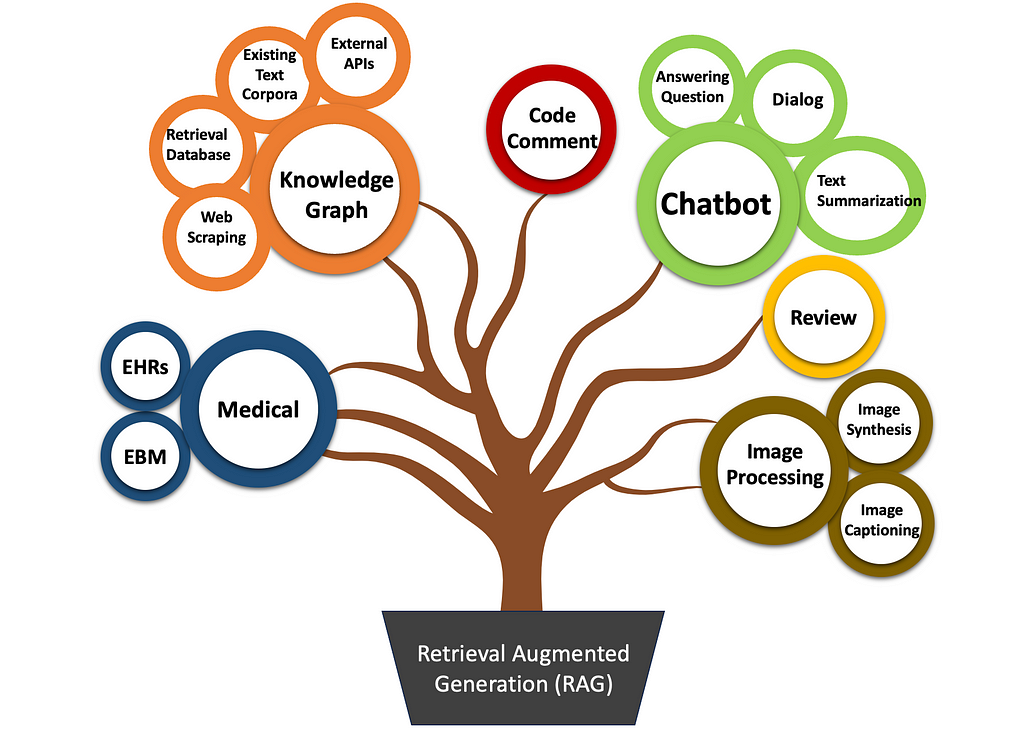

A Unified and Collaborative Framework for LLM

Author: Qingqin Fang (ORCID: 0009-0003-5348-4264)

Introduction

In today's rapidly evolving field of artificial intelligence, large language models (LLMs) are demonstrating unprecedented potential. In particular, the Retrieval-Augmented Generation (RAG) architecture, which pairs an LLM with a retrieval step over external documents, has become a hot topic in AI because it lets a model ground its answers in retrieved sources rather than in its training data alone.